Encoder in the Transformer

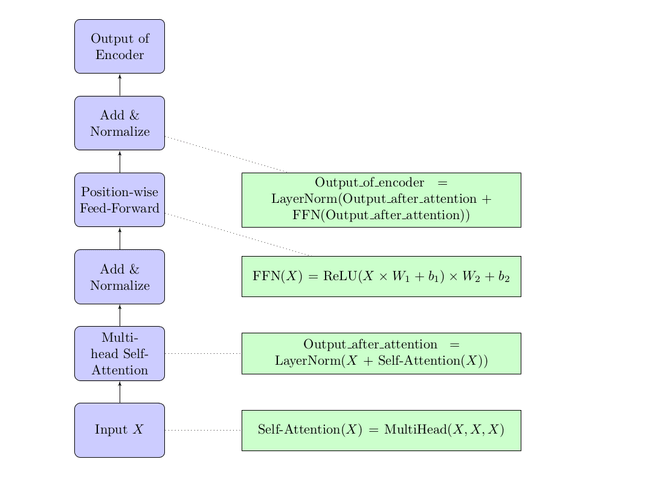


Encoder in the Transformer:

Each encoder in the Transformer consists of two main parts:

- Multi-head Self-Attention followed by Add & Normalize: This is the self-attention mechanism we discussed earlier, in which each position of the input sequence attends to all positions of the same sequence. The attention output is added to the sublayer's input through a residual connection (the "Add" step), and layer normalization is then applied to the sum.

- Position-wise Feed-Forward Network followed by Add & Normalize: This consists of two linear transformations with a ReLU activation in between, applied to each position separately and identically (hence "position-wise"). As with the self-attention sublayer, the feed-forward output is added to its input through a residual connection, followed by layer normalization.
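Both sublayers share the same "Add & Normalize" wrapper, computing $\text{LayerNorm}(x + \text{Sublayer}(x))$. A minimal NumPy sketch of that pattern follows; `layer_norm` and `add_and_norm` are hypothetical helper names, the sublayer can be any function that maps a (tokens × features) array to one of the same shape, and the $\epsilon$ value is an assumed default.

```python
import numpy as np


def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each row (one token) to zero mean and unit variance, then scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def add_and_norm(x, sublayer, gamma, beta):
    """Residual connection around `sublayer` ("Add"), then layer normalization ("Normalize")."""
    return layer_norm(x + sublayer(x), gamma, beta)


# Usage: 3 tokens with 4 features each, and a stand-in sublayer that halves its input.
rng = np.random.default_rng(0)
x = rng.normal(size=(3, 4))
gamma, beta = np.ones(4), np.zeros(4)
out = add_and_norm(x, lambda h: 0.5 * h, gamma, beta)
print(out.shape)                            # (3, 4)
print(np.allclose(out.mean(axis=-1), 0.0))  # True: every token is re-centred
```

Passing the sublayer in as a function keeps the wrapper identical for the attention and feed-forward sublayers, which mirrors how the two parts above are described.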

Here, $X$ is the input matrix after positional encoding: the word embeddings plus the positional encodings, with one row per token.
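As an illustration of how $X$ might be built, the sketch below adds sinusoidal positional encodings to a matrix of word embeddings. The sinusoidal scheme is the one from the original Transformer paper and is an assumption here, since this post does not say which encoding it uses; the embedding values are random placeholders, and `sinusoidal_positional_encoding` is a hypothetical helper name.

```python
import numpy as np


def sinusoidal_positional_encoding(n_positions, d_model):
    """PE[pos, 2i] = sin(pos / 10000^(2i / d_model)); PE[pos, 2i + 1] = cos(same angle)."""
    if d_model % 2 != 0:
        raise ValueError("this sketch assumes an even d_model")
    positions = np.arange(n_positions)[:, np.newaxis]     # (n, 1)
    even_dims = np.arange(0, d_model, 2)[np.newaxis, :]   # (1, d_model / 2), the values 2i
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)  # even feature indices
    pe[:, 1::2] = np.cos(angles)  # odd feature indices
    return pe


# Usage: 5 tokens, d_model = 8, with random placeholder word embeddings.
rng = np.random.default_rng(0)
n_tokens, d_model = 5, 8
word_embeddings = rng.normal(size=(n_tokens, d_model))
X = word_embeddings + sinusoidal_positional_encoding(n_tokens, d_model)
print(X.shape)  # (5, 8)
```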

Equations for the Encoder's Operations:

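- Self-Attention (queries, keys, and values are all derived from the positionally encoded input $X$):

  $$Z = \text{MultiHead}(X, X, X)$$

- Add & Normalize after Self-Attention:

  $$X_1 = \text{LayerNorm}(X + Z)$$

- Feed-Forward:

  $$F = \text{FFN}(X_1)$$

  Assuming the feed-forward network consists of two linear layers with weights $W_1$ and $W_2$ and biases $b_1$ and $b_2$, and using ReLU as the activation function, it can be represented as:

  $$\text{FFN}(X_1) = \max(0,\, X_1 W_1 + b_1)\, W_2 + b_2$$

- Add & Normalize after Feed-Forward:

  $$X_2 = \text{LayerNorm}(X_1 + F)$$

So, the output of the encoder layer, after processing the input matrix $X$ (with positional encodings), is $X_2$. If there are multiple encoder layers in the Transformer, this output serves as the input for the next encoder layer.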
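The four equations above can be traced end to end in a short NumPy sketch. To stay compact it assumes a single attention head (the multi-head version repeats the same computation per head and concatenates the results), random placeholder weights, and hypothetical names such as `encoder_layer` and `init_params`; it illustrates the data flow and is not a trained or reference implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # shift by the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x, gamma, beta, eps=1e-5):
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention: Q, K and V are all projections of x."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (n, n): every position scores every position
    return softmax(scores, axis=-1) @ v


def feed_forward(x, w1, b1, w2, b2):
    """FFN(x) = max(0, x W1 + b1) W2 + b2, applied to each row (position) independently."""
    return np.maximum(0.0, x @ w1 + b1) @ w2 + b2


def encoder_layer(x, p):
    z = self_attention(x, p["w_q"], p["w_k"], p["w_v"])        # Z
    x1 = layer_norm(x + z, p["gamma1"], p["beta1"])            # X1 = LayerNorm(X + Z)
    f = feed_forward(x1, p["w1"], p["b1"], p["w2"], p["b2"])   # F = FFN(X1)
    return layer_norm(x1 + f, p["gamma2"], p["beta2"])         # X2 = LayerNorm(X1 + F)


def init_params(rng, d_model, d_ff, scale=0.1):
    """Random placeholder weights; a trained model would learn these values."""
    def w(*shape):
        return rng.normal(scale=scale, size=shape)
    return {
        "w_q": w(d_model, d_model), "w_k": w(d_model, d_model), "w_v": w(d_model, d_model),
        "w1": w(d_model, d_ff), "b1": np.zeros(d_ff),
        "w2": w(d_ff, d_model), "b2": np.zeros(d_model),
        "gamma1": np.ones(d_model), "beta1": np.zeros(d_model),
        "gamma2": np.ones(d_model), "beta2": np.zeros(d_model),
    }


# Usage: two stacked layers; each layer's output X2 becomes the next layer's input.
rng = np.random.default_rng(0)
n_tokens, d_model, d_ff = 4, 8, 32
x = rng.normal(size=(n_tokens, d_model))
layers = [init_params(rng, d_model, d_ff) for _ in range(2)]
h = x
for p in layers:
    h = encoder_layer(h, p)
print(h.shape)  # (4, 8)
```

Because each layer maps an $n \times d_{\text{model}}$ matrix to another of the same shape, stacking encoder layers is just a loop that feeds each layer's $X_2$ into the next.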

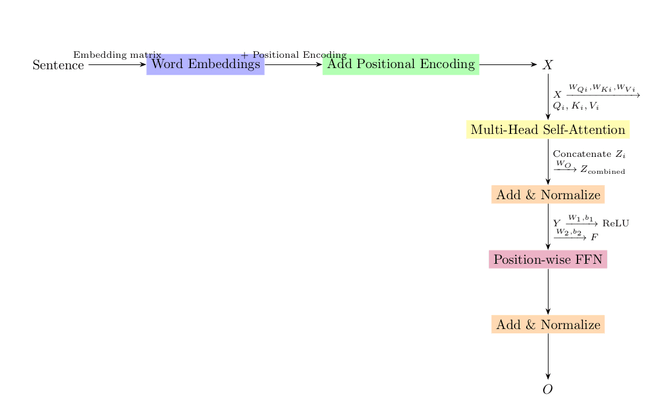

Encoder Process with Parameters:

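- Word Embeddings & Positional Encoding:

  $$X = E + P$$

  where $E \in \mathbb{R}^{n \times d_{\text{model}}}$ contains the word embeddings of the $n$ input tokens and $P$ contains the positional encodings, with the same shape.

- Multi-Head Self-Attention:

  - For each head $i = 1, \dots, h$:

    $$Q_i = X W_i^Q, \quad K_i = X W_i^K, \quad V_i = X W_i^V$$

  - Self-attention for each head:

    $$\text{head}_i = \text{softmax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) V_i$$

    where $d_k$ is the dimension of each head's queries and keys (typically $d_k = d_{\text{model}} / h$).

  - Combine all heads:

    $$Z = \text{Concat}(\text{head}_1, \dots, \text{head}_h)\, W^O$$

- Add & Normalize after Self-Attention:

  $$X_1 = \text{LayerNorm}(X + Z)$$

  where, for each row $x$ (one token), $\text{LayerNorm}(x) = \gamma \odot \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta$, with $\mu$ and $\sigma^2$ the mean and variance of that row's features and $\epsilon$ a small constant for numerical stability.

- Position-wise Feed-Forward Network (FFN):

  $$F = \max(0,\, X_1 W_1 + b_1)\, W_2 + b_2$$

  - FFN Parameters: $W_1, b_1$ for the first layer and $W_2, b_2$ for the second layer.

- Add & Normalize after FFN:

  $$X_2 = \text{LayerNorm}(X_1 + F)$$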

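Where:

- $W_i^Q$, $W_i^K$, and $W_i^V$ (each $d_{\text{model}} \times d_k$) are the weight matrices for computing the Query, Key, and Value for the $i$-th attention head.

- $W^O$ ($h d_k \times d_{\text{model}}$) is the weight matrix for combining the outputs of all attention heads.

- $W_1, b_1$ are the weight matrix ($d_{\text{model}} \times d_{\text{ff}}$) and bias for the first linear transformation in the FFN, where $d_{\text{ff}}$ is the FFN's hidden size.

- $W_2, b_2$ are the weight matrix ($d_{\text{ff}} \times d_{\text{model}}$) and bias for the second linear transformation in the FFN.

- $\gamma$ and $\beta$ are the learned scale and shift parameters for layer normalization; each Add & Normalize step has its own pair.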
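The multi-head step, with the per-head matrices $W_i^Q$, $W_i^K$, $W_i^V$ and the output matrix $W^O$ listed above, can be sketched in NumPy as follows. The function name is hypothetical, the weights are random placeholders, and the explicit loop over heads is written for readability; practical implementations usually batch all heads into a single tensor operation.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # shift by the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o):
    """
    x:             (n, d_model) input matrix, one row per token
    w_q, w_k, w_v: lists of h per-head matrices W_i^Q, W_i^K, W_i^V, each (d_model, d_k)
    w_o:           (h * d_k, d_model) output matrix W^O
    """
    heads = []
    for wq_i, wk_i, wv_i in zip(w_q, w_k, w_v):
        q_i, k_i, v_i = x @ wq_i, x @ wk_i, x @ wv_i         # Q_i = X W_i^Q, and so on
        d_k = k_i.shape[-1]
        weights = softmax(q_i @ k_i.T / np.sqrt(d_k))        # (n, n) attention weights
        heads.append(weights @ v_i)                          # head_i: (n, d_k)
    return np.concatenate(heads, axis=-1) @ w_o              # Concat(head_1, ..., head_h) W^O


# Usage: h = 2 heads and d_model = 8, so d_k = d_model / h = 4.
rng = np.random.default_rng(0)
n_tokens, d_model, h = 5, 8, 2
d_k = d_model // h
x = rng.normal(size=(n_tokens, d_model))
w_q = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
w_k = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
w_v = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
w_o = rng.normal(size=(h * d_k, d_model))
z = multi_head_self_attention(x, w_q, w_k, w_v, w_o)
print(z.shape)  # (5, 8)
```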

The final output after one encoder layer is $X_2$, the result of the second Add & Normalize step. If there are more encoder layers, $X_2$ serves as the input for the next encoder layer.
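As a worked example of the parameter list above, the sketch below counts the learned parameters in one encoder layer. It assumes $d_k = d_{\text{model}} / h$ and, following that list, no bias terms on the attention projections (many implementations add them, which would contribute another $4 d_{\text{model}}$ parameters). The function name is hypothetical.

```python
def encoder_layer_param_count(d_model, d_ff, h):
    """
    Count the learned parameters of one encoder layer.
    Assumes d_k = d_model / h and no biases on W_i^Q, W_i^K, W_i^V or W^O.
    """
    d_k = d_model // h
    attention = h * 3 * d_model * d_k      # W_i^Q, W_i^K, W_i^V for every head
    attention += h * d_k * d_model         # W^O
    ffn = d_model * d_ff + d_ff            # W_1, b_1
    ffn += d_ff * d_model + d_model        # W_2, b_2
    layer_norms = 2 * (2 * d_model)        # gamma and beta for each of the two Add & Normalize steps
    return attention + ffn + layer_norms


# Usage: the base configuration of the original Transformer (d_model = 512, d_ff = 2048, h = 8).
print(encoder_layer_param_count(512, 2048, 8))  # 3150336
```

With this configuration the FFN accounts for 2,099,712 of the 3,150,336 parameters, roughly two thirds of the layer.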
